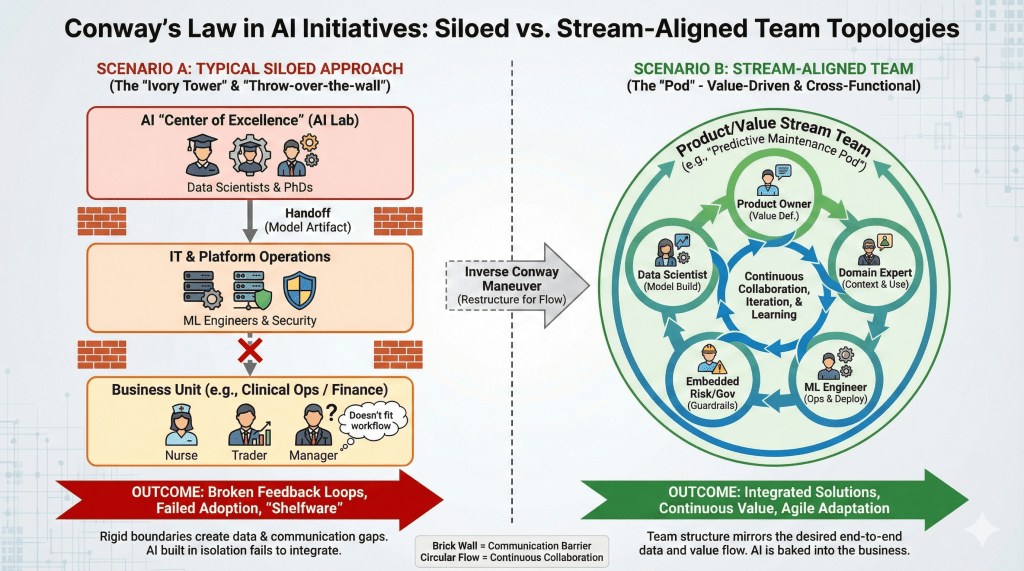

For decades, Melvin Conway’s observation has haunted software architecture: organizations that design systems are constrained to produce designs that are copies of the communication structures of those organizations. In the era of artificial intelligence, this law is not just an architectural constraint; it is a strategic ceiling.

Across North American boardrooms—from Bay Street banks to major healthcare networks—we are seeing a distinct pattern. AI initiatives are failing to scale not because of model inaccuracy or compute shortages, but because the underlying organizational topology fragments the very data and workflows the AI is meant to unify. Your AI will not fix your silos; it will learn them.

Key Insights: When the Org Chart Becomes the Architecture

1. The Data Sovereignty Trap (Financial Services)

In large financial institutions, risk, fraud, and marketing often operate as sovereign states. Conway’s Law dictates that if these teams do not share a fluid communication structure, neither will their data.

- The Scenario: A Tier-1 bank builds a “Customer 360” AI to predict churn. However, the ‘Credit Card’ division and the ‘Mortgage’ division have separate data governance councils that rarely meet.

- The Result: The AI model is trained primarily on checking account activity because that data was “easiest to reach.” The model flags a high-net-worth mortgage client as a churn risk simply because they don’t use their debit card, leading to an embarrassing retention call. The AI learned the org chart, not the customer.
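The failure in this scenario can be sketched in a few lines. This is a minimal illustration, assuming hypothetical per-division fields and a deliberately simple rule in place of a real churn model; none of the names come from an actual banking system. A rule that sees only checking data flags the client, while the same intent applied to a joined view does not.

```python
from dataclasses import dataclass


# Hypothetical view of one customer; every field name is illustrative.
@dataclass
class CustomerView:
    debit_txns_90d: int = 0        # owned by the checking division
    card_spend_90d: float = 0.0    # owned by the credit card division
    mortgage_balance: float = 0.0  # owned by the mortgage division


def churn_risk_siloed(c: CustomerView) -> bool:
    """Rule built only from the data that was easiest to reach (checking)."""
    return c.debit_txns_90d == 0


def churn_risk_joined(c: CustomerView) -> bool:
    """Same intent, but any active relationship in any division counts."""
    engaged = (
        c.debit_txns_90d > 0
        or c.card_spend_90d > 0
        or c.mortgage_balance > 0
    )
    return not engaged


# A high-net-worth mortgage client who never uses a debit card.
client = CustomerView(card_spend_90d=4200.0, mortgage_balance=850_000.0)
print(churn_risk_siloed(client))  # True
print(churn_risk_joined(client))  # False
```

The code is identical in spirit in both functions; the only difference is which divisions' data the team could reach.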

2. The “Ivory Tower” Lab (Healthcare & Pharma)

Many organizations attempt to bypass bureaucracy by establishing a centralized “AI Center of Excellence” (CoE) staffed by PhDs and data scientists who are physically and structurally removed from operations.

- The Scenario: A healthcare network creates an AI Lab to build a sepsis-prediction tool. The data scientists achieve 98% accuracy in the lab. However, they have no daily interaction with the nursing staff or the IT team managing the Electronic Health Record (EHR) workflows.

- The Result: The model works, but the deployment fails. The alert system doesn’t fit into the nurses’ triage protocol, and the IT team blocks the integration due to security protocols the Lab ignored. The “perfect” model sits on a shelf because the team structure isolated the builders from the users.

3. The Feature Bolt-On (Education & EdTech)

In established software and education companies, AI is often treated as a feature to be added by a separate “AI Team” rather than a core transformation of the product.

- The Scenario: An EdTech company wants to add “AI tutoring” to its Learning Management System (LMS). The core product team focuses on stability and uptime, while a separate AI team builds the tutor.

- The Result: The AI tutor is deployed as a floating widget that doesn’t understand the curriculum context stored in the core LMS. Students get generic answers that contradict their coursework. The architecture reflects the divide between “Product Maintenance” and “AI Innovation” teams.

Strategic Actions for Executives

1. Execute the “Inverse Conway Maneuver”

Stop trying to force collaboration across rigid silos. Instead, restructure your teams to mirror the AI architecture you want.

- The Action: If you want a predictive maintenance system that integrates with your supply chain, do not keep Data Science and Logistics in separate buildings. Form a “Stream-Aligned Team” that includes a Data Scientist, an ML Engineer, a Logistics Manager, and a User Experience designer.

- Why it works: By co-locating these roles (virtually or physically), you force the communication pathways that allow data to flow the way the application needs it to.
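One way to make the maneuver concrete is to compare the architecture you want with the communication paths you have. The sketch below is only an illustration under stated assumptions: the component names, the ownership map, and the list of existing team relationships are all hypothetical. It derives which team pairs the target system requires and reports the pairs that do not currently talk to each other.

```python
# Hypothetical target architecture: component pairs that must exchange data.
component_links = {
    ("sensor_ingest", "failure_model"),
    ("failure_model", "parts_ordering"),
    ("parts_ordering", "technician_ui"),
}

# Hypothetical ownership: which team owns each component.
owner = {
    "sensor_ingest": "data_science",
    "failure_model": "data_science",
    "parts_ordering": "logistics",
    "technician_ui": "ux",
}

# Hypothetical current state: team pairs that already communicate regularly.
team_links = {frozenset({"logistics", "ux"})}


def missing_team_links(component_links, owner, team_links):
    """Return team pairs the architecture requires but the org does not connect."""
    required = {
        frozenset({owner[a], owner[b]})
        for a, b in component_links
        if owner[a] != owner[b]  # links inside one team need no new pathway
    }
    return required - team_links


gaps = missing_team_links(component_links, owner, team_links)
print(sorted(sorted(pair) for pair in gaps))  # [['data_science', 'logistics']]
```

Each gap in the output is a candidate for a shared stream-aligned team rather than another cross-silo committee.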

2. Shift from “Project” to “Product” Funding

Projects have end dates; AI products require continuous learning. Conway’s Law thrives in project-based environments where teams assemble and disband, leaving “orphaned” code.

- The Action: Move to a product-operating model where one team owns the AI lifecycle for the life of the product, from launch through retirement. This forces the team to prioritize long-term “MLOps” (Machine Learning Operations) over short-term “model accuracy,” preventing the brittle “throw-it-over-the-wall” architecture common in legacy IT.

3. Embed Governance, Don’t Gatekeep It

In highly regulated industries (Finance, Health), Compliance is often a final “gate” at the end of the process.

- The Action: Embed a compliance or risk officer into the AI product team as a part-time member.

- Why it works: This ensures that “Responsible AI” guardrails are built into the architecture from Day 1 rather than applied as a clumsy patch at the end. It is the same principle applied to governance: if you want compliance designed into the system, design it into the team.
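As a rough sketch of what an embedded guardrail can look like, the example below runs a compliance rule at design time, before any model is trained, instead of at a final review. The prohibited feature list and function names are hypothetical placeholders for whatever the embedded compliance member actually specifies.

```python
# Hypothetical guardrail supplied by the team's compliance member.
PROHIBITED_FEATURES = {"postal_code", "marital_status"}


def check_features(features):
    """Return the prohibited features present in a proposed feature list."""
    return sorted(set(features) & PROHIBITED_FEATURES)


def design_review(features):
    """Run at design time, before any training job is launched."""
    violations = check_features(features)
    if violations:
        raise ValueError(f"prohibited features: {violations}")
    return features


approved = design_review(["tenure_months", "avg_balance"])
print(approved)  # ['tenure_months', 'avg_balance']

try:
    design_review(["tenure_months", "postal_code"])
except ValueError as err:
    print(err)  # prohibited features: ['postal_code']
```

Because the check lives in the team's own workflow, a violation surfaces when a feature is proposed, not after the model is built.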

This article was written with the assistance of my brain, Google Gemini, ChatGPT, and other wondrous toys.

AI won’t fix silos; it will just learn them.

The org chart becomes the architecture.

Teams first, models second. That’s the real unlock.